Programming With Headphones

Short on time? Jump directly to the video of a robot being programmed with headphones.

Background

Maybe, like me, you grew up with a stack of VHS tapes and a VCR in the living room. As cool as it was to have a handful of movies you cared about, those same tapes can be difficult to play now, since your old VCR is likely on its last legs, if it even works at all (the last time I tried to watch a family video, the player literally ate the magnetic tape).

And while you could have painstakingly converted all those movies to digital while your VCR still functioned properly, even digital formats come and go. Trying to play video files encoded with common codecs from a decade ago on today’s computers yields an additional slew of challenges; many are proprietary or need to be hacked to play on current operating systems.

So it goes with most technology: there’s always a wind pushing things forward, leading users to believe that the latest and greatest format will last forever, only to blow on by a few years later. Whatever's left behind barely works as the world continues evolving beyond it.

Designing For Longevity

Of course, most electronic devices we buy today aren’t designed to last at all; we know that things change too quickly for a gadget you buy today to matter 10 or 20 years from now. Even if every component within a device is built to last, there’s still a communication gap: protocols, cables, connectors, and applications all evolve so rapidly that even if the device still works, it typically can't talk with future devices or update its own functionality.

But rapid obsolescence isn’t true of all technologies. A few things persist almost by accident, such as early radios that still work beautifully (although their days seem numbered, as music moves toward online streaming and digital standards).

And some things never change. As humans, we hear the world around us with our ears, and no matter how fancy technology gets, the nature of audio itself (the mechanical waves vibrating through the air) will remain relatively constant. How audio is stored, modified, recorded, encoded, transferred, and produced is a different story, but the sound itself will be almost identical.

Using audio to transmit data is nothing new -- perhaps you remember those two long decades of dial-up and the warbling of a modem to signal your connection to the internet? But for the most part, audio-encoded data has been relegated to communicating over phone lines and has slowly faded away as the world moved to broadband.

To take it a step further, what if our devices could directly talk over the air, just as we do with one another? Using audible sound (20Hz to 20kHz) as the communication channel, future electronics could continue to interact with other devices and the outside world for decades, even as the fundamental nature of computers (and their snazzy paraphernalia) transforms entirely.

Meet Canny the Robot

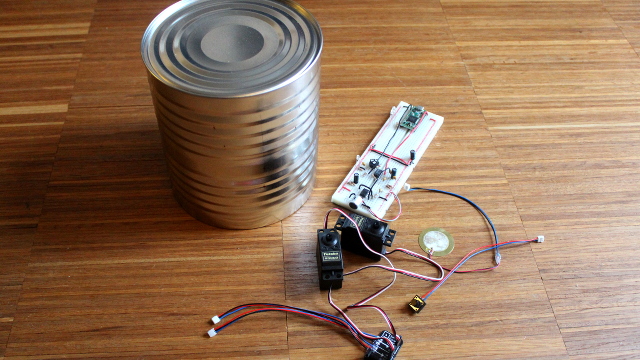

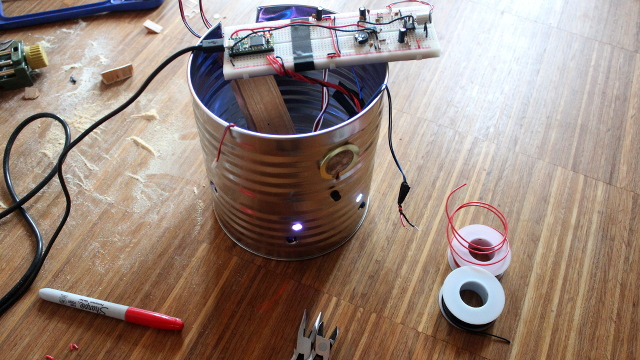

To explore the challenges of an audio-only communication channel and provide a tangible proof of concept, I built a robot called Canny. A simple realtime interpreter running on Canny’s microcontroller (an Arduino-like Teensy 3.1 board) can update its eye color, eyebrow angle, and musical emissions.

Canny has red, green, and blue LEDs in each eye that combine to allow a wide range of colors, useful for expressing a mood or indicating status while programming. Above each eye is a servo that can change the angle of the eyebrow to further augment Canny’s expression. A piezo speaker stands in for a mouth, letting the robot play a range of notes. When a user presses Canny’s button nose, the robot performs a combination of color, motion, and sound as specified by the current program.

With the help of a microphone on the left side of Canny’s head, a series of high frequency tones can be used to reprogram the robot via a pair of headphones. Opposite the microphone is a light sensor used to determine when headphones are present and thus allow programming to take place.

Sending Data via Audio

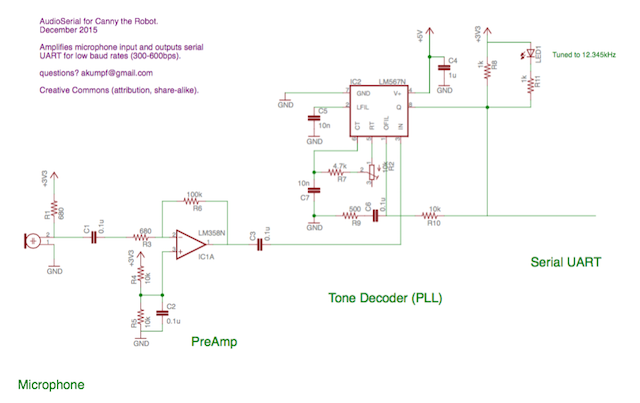

To encode the 1s and 0s of data transmitted via the headphones, a webpage generates the audio using audio frequency-shift keying (AFSK). Simply put, AFSK uses two frequencies and shifts between them to encode the data. It's important that the frequencies chosen work with standard headphones, so I picked 12,345Hz and 9,876Hz as the high and low values, respectively: easy to differentiate and still well below the common 20,000Hz audio cutoff (they're also fun numbers since their digits count sequentially up and down).
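To make the two-tone idea concrete, here is a minimal sketch of AFSK modulation in Python. It is an illustration only, not the code the webpage uses. The 44,100Hz sample rate, the 8N1 UART framing (one start bit, eight data bits sent least significant bit first, one stop bit), and the mapping of a 1 bit to the 12,345Hz tone are all assumptions. The function names `uart_frame` and `afsk_modulate` are made up for this example.

```python
import math

SAMPLE_RATE = 44100   # assumed output sample rate in Hz
BAUD = 300            # one of the low baud rates mentioned in this post
FREQ_HIGH = 12345.0   # tone assumed to represent a 1 bit
FREQ_LOW = 9876.0     # tone assumed to represent a 0 bit


def uart_frame(byte):
    """Return the 10 bits of an assumed 8N1 frame: start (0), data LSB first, stop (1)."""
    data_bits = [(byte >> i) & 1 for i in range(8)]
    return [0] + data_bits + [1]


def afsk_modulate(payload, sample_rate=SAMPLE_RATE, baud=BAUD):
    """Turn bytes into a list of float samples in [-1, 1] using two-tone AFSK."""
    samples = []
    phase = 0.0      # carried across bits so the waveform has no jumps
    bit_index = 0
    for byte in payload:
        for bit in uart_frame(byte):
            freq = FREQ_HIGH if bit else FREQ_LOW
            step = 2.0 * math.pi * freq / sample_rate
            # Fill up to the exact end time of this bit so rounding never accumulates.
            bit_index += 1
            end = round(bit_index * sample_rate / baud)
            while len(samples) < end:
                samples.append(math.sin(phase))
                phase = (phase + step) % (2.0 * math.pi)
    return samples
```

Keeping the phase continuous when the frequency switches avoids clicks at bit boundaries, which would otherwise add broadband noise to the signal. At 300 baud and 44,100 samples per second, each bit lasts exactly 147 samples, so a single byte takes 1,470 samples (about 33 ms) under these assumptions.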

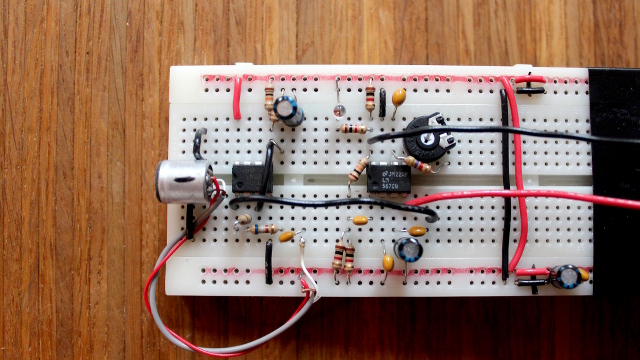

While it’s quite feasible for a microcontroller (such as an Arduino) to directly process the incoming audio from a microphone and decode the data, the processing overhead, precise timing required, and need for error detection can be major hurdles. So instead, I decided to decode the data in hardware (via a simple PLL circuit) that in turn outputs serial UART data that most microcontrollers can manage directly and access with just two lines of code.

The circuit I constructed is very basic and works great at low baud rates (such as 300 or 600 bps). Naturally, environmental noise can affect data, so a basic form of packetization (via a start command, length value, and checksum) was also implemented.
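As a rough illustration of that kind of packetization, the sketch below wraps a payload in a start byte, a one-byte length, and a checksum, then scans a noisy byte stream for the first packet that verifies. The details are assumptions rather than Canny's actual format: the start value `0x7E`, the single-byte length field, the sum-modulo-256 checksum, and the names `build_packet` and `parse_packet` are all hypothetical.

```python
START_BYTE = 0x7E  # hypothetical start-of-packet marker


def checksum(data):
    """Sum of all bytes modulo 256 (an assumed, deliberately simple checksum)."""
    return sum(data) & 0xFF


def build_packet(payload):
    """Wrap payload bytes as: start byte, length, payload, checksum."""
    if len(payload) > 255:
        raise ValueError("payload must fit in a one-byte length field")
    header = bytes([START_BYTE, len(payload)])
    return header + bytes(payload) + bytes([checksum(payload)])


def parse_packet(stream):
    """Return the first payload whose checksum verifies, or None if there is none."""
    stream = bytes(stream)
    for i in range(len(stream)):
        if stream[i] != START_BYTE:
            continue  # skip noise until something looks like a packet start
        if i + 1 >= len(stream):
            break     # start byte is the last byte; no length field follows
        length = stream[i + 1]
        end = i + 2 + length  # index of the checksum byte
        if end >= len(stream):
            continue  # packet would run past the buffer; keep scanning
        payload = stream[i + 2:end]
        if checksum(payload) == stream[end]:
            return payload
    return None
```

For example, `parse_packet(b"\x00\x13" + build_packet(b"hi"))` skips the two stray bytes and returns `b"hi"`, while flipping any single bit of the payload makes the checksum fail and the packet is dropped. If each byte is sent with one start bit and one stop bit (an assumption), 300 bps carries 30 bytes per second, so a 16-byte payload plus these 3 bytes of overhead would take roughly 0.63 seconds to send.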

The overall communication speed can also be greatly increased with more complex modulation schemes (such as QAM) and application-specific demodulation hardware, but that was beyond the scope of my initial tests.

Open Source

Everything about Canny is released as open source, so you can experiment with adding headphone programming to your own projects!

Note that the headphone programmer produces annoying high-frequency audio, so save it for the robots (and spare your ears). All the code that generates the beeps and boops is included in the page's source code; feel free to download a copy and modify it as needed.

This open research was published by Adam Kumpf on December 4th, 2015.